Anirudh (Ani) Ajith

Predoctoral Researcher @ Allen Institute for AI

Hello!

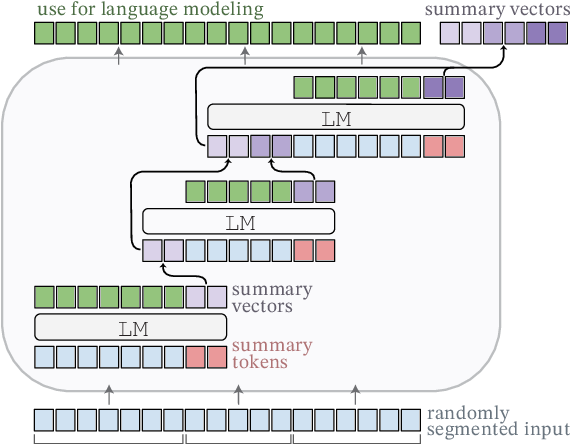

I am a predoctoral researcher at the Allen Institute for AI (Ai2), where I work with Dr. Doug Downey on problems related to automating scientific discovery and predicting the trajectory of future science. Before joining Ai2, I earned a Master's degree from Princeton University, where I was fortunate to start my research career working with Professors Karthik Narasimhan and Danqi Chen on LLM-related topics such as in-context learning, context window expansion, detecting leakage of undesirable text into pretraining data, and retrieval. During my undergraduate studies at IIT Madras, I worked with Dr. Mitesh Khapra and Dr. Pratyush Kumar (as a member of AI4Bharat) on improving bitext mining for Indic languages.

Thus far, my research has primarily been focused on (1) expanding the set of problems that LLMs can solve by addressing their key contemporary limitations, and (2) developing principled techniques for ensuring that the real-world deployment of these systems can take place in socially responsible ways.

Going forward, I am excited to work on applications of LLM-based systems to scientific and mathematical domains, including (and especially!) frameworks that employ formal verification to bootstrap generation and aid in search. I want to help build systems that are (eventually) capable of superhuman mathematical theorem-proving and compositional multi-step reasoning, and that can serve as valuable tools, or even partners, in STEM research.

news

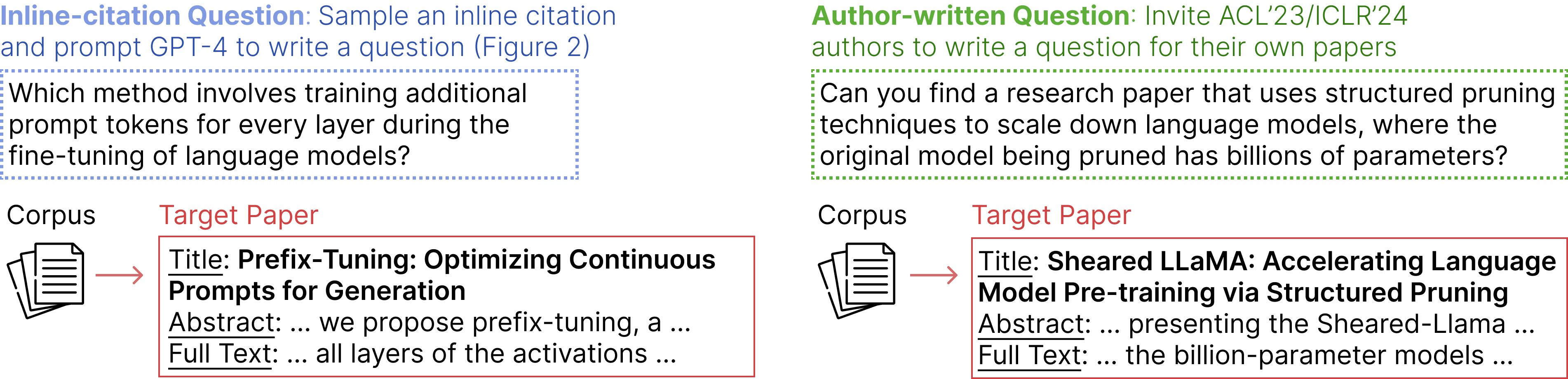

| Sep 20, 2024 | LitSearch: A Retrieval Benchmark for Scientific Literature Search has been accepted to EMNLP 2024! But wait, there’s more! Downstream Trade-offs of a Family of Text Watermarks has been accepted at EMNLP Findings 2024! |

|---|---|

| Aug 05, 2024 | I’ve started a position as a Predoctoral Investigator at the Allen Institute for Artificial Intelligence! |

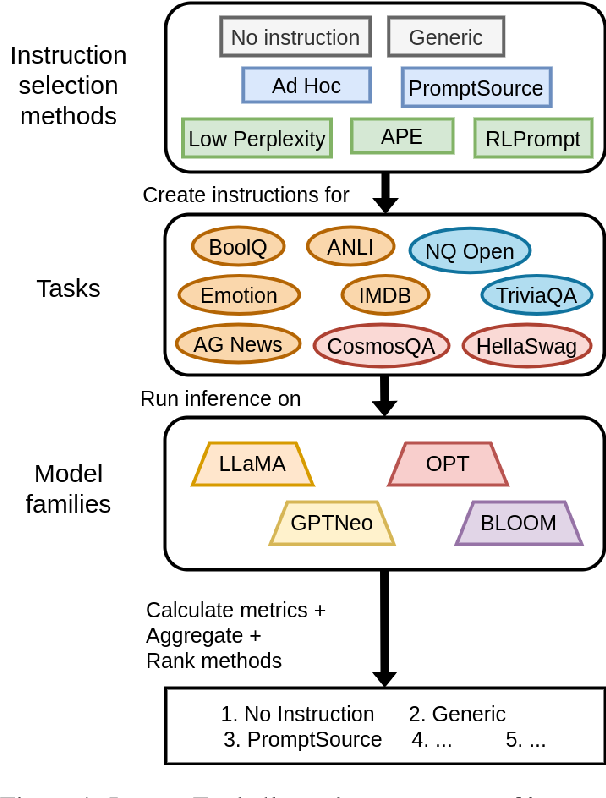

| Mar 14, 2024 | InstructEval: Systematic Evaluation of Instruction Selection Methods is accepted to Findings of NAACL 2024! |

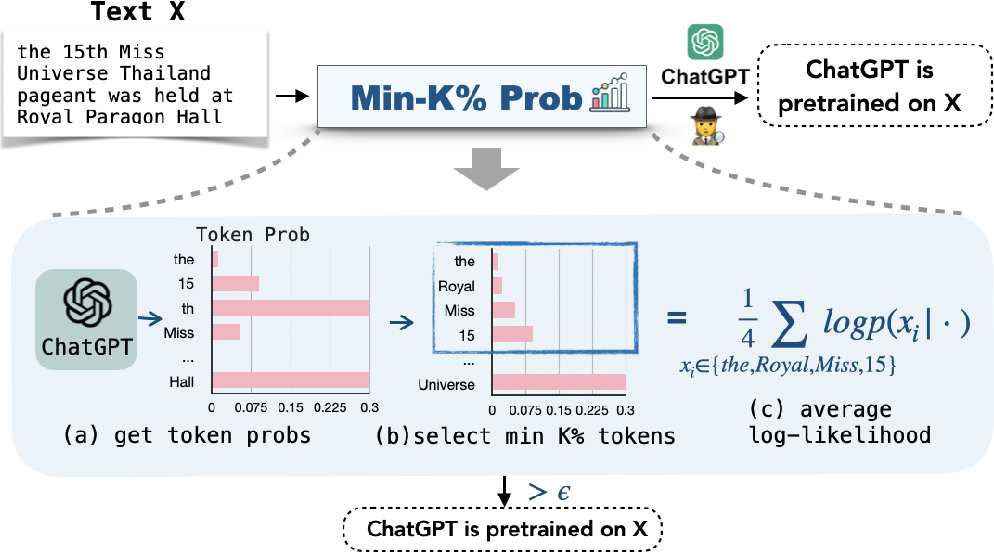

| Jan 15, 2024 | Detecting Pretraining Data from Large Language Models is accepted at ICLR 2024! |

| Oct 27, 2023 | Our paper InstructEval: Systematic Evaluation of Instruction Selection Methods earns a Spotlight acceptance at the R0-FoMo Workshop @ NeurIPS 2023! Also, our Detecting Pretraining Data from Large Language Models is accepted for an Oral presentation at the Regulatable ML Workshop @ NeurIPS 2023! |